A Q&A with David Turner, Director, Agility3

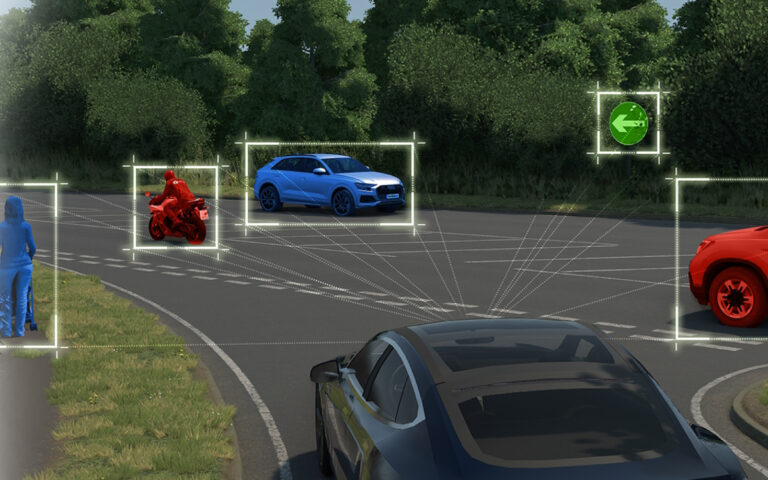

One of the most exciting industries Agility3 are involved with is driverless vehicle development. In this Q&A, Agility3 Director David Turner describes how our ultra-realistic visuals are helping to accelerate the development, reliability, and safety of driverless vehicles, by adding value to the simulation technologies used to train and test them.

Q: Can you introduce yourself and your role in the development of driverless vehicles?

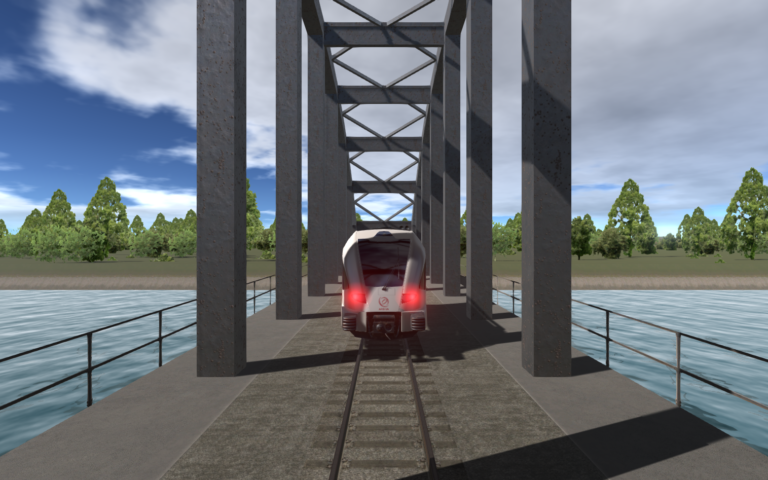

A: Hi, I’m David Turner, Director at Agility3. We specialise in top-quality simulation visuals and interactive Virtual Reality simulations for the testing and development of vehicles and future mobility systems. We develop geographically accurate 3D worlds, custom 3D environments, high quality 3D models and immersive tools and experiences. I’m primarily responsible for looking after our partners and customers, making sure everyone gets what they need when they need it.

Q: Why is simulation important in the development of driverless vehicles?

A: There are several reasons. First and foremost, we all want confidence that driverless vehicles will be safe, at least as safe as a competent human driver. Research suggests that a vehicle will need to drive over 10 billion miles to gather enough evidence to be able to demonstrate this with confidence. That simply isn’t possible.

So the solution is to drive virtual miles in a simulator. This can be done at faster than real-time speed, so the time and cost to test, update and retest is massively reduced. It’s also safer than testing in the real world, and enables the testing to focus on all the niche ‘edge cases’ that are hazardous and most challenging to driverless vehicles, rather than driving billions of miles along roads where nothing of interest happens.
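To give a rough sense of scale, the sketch below works through the arithmetic. Only the 10 billion mile target comes from the answer above. The fleet size, average speed, number of simulator instances and faster-than-real-time factor are illustrative assumptions, not Agility3 figures.

```python
# Back-of-envelope estimate of how long 10 billion test miles would take.
# Every input except TARGET_MILES is an illustrative assumption.

HOURS_PER_YEAR = 24 * 365

TARGET_MILES = 10_000_000_000  # figure quoted in the interview


def years_to_cover(target_miles, vehicles, avg_mph, speedup=1.0):
    """Years needed for `vehicles` running non-stop at `avg_mph`.

    `speedup` is the faster-than-real-time factor (1.0 means real time).
    """
    miles_per_year = vehicles * avg_mph * speedup * HOURS_PER_YEAR
    return target_miles / miles_per_year


# Hypothetical real-world fleet: 100 vehicles, 25 mph average, driving 24/7.
real_world_years = years_to_cover(TARGET_MILES, vehicles=100, avg_mph=25)

# Hypothetical simulation farm: 1,000 parallel instances at 10x real time.
simulated_years = years_to_cover(
    TARGET_MILES, vehicles=1000, avg_mph=25, speedup=10
)

print(f"Real world: {real_world_years:.0f} years")
print(f"Simulation: {simulated_years:.2f} years")
```

Under these assumed numbers the real-world fleet would need several centuries, while the simulated fleet would need only a few years. Different assumptions shift the totals, but the gap between the two remains large.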

Q: What do you provide specifically to support comprehensive testing of driverless vehicles?

A: We provide high-quality, bespoke virtual terrains for driving simulators. Typically, vehicle simulators require two distinct terrain models. Firstly, a visual 3D model that provides the inputs to the simulated sensors such as cameras or LiDAR. Secondly, a logical map, for example OpenDRIVE, which tells the simulator about the road network, rights of way, lane descriptions, speed limits and so on.

Between them, these two deliverables can also communicate information to the simulator about objects and what they’re made of, road surface geometry, and road surface materials.
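As a loose illustration of what a logical map holds, the sketch below reads a tiny, hand-written OpenDRIVE-style fragment with Python's standard XML parser. The road name, length, speed limit and lane layout are invented for the example, and `summarise_roads` is a hypothetical helper rather than part of any Agility3 or simulator toolchain. Real OpenDRIVE files contain far more, including geometry, elevation, junctions and signals.

```python
import xml.etree.ElementTree as ET

# Hand-written fragment for illustration only; all values are made up.
SAMPLE_XODR = """
<OpenDRIVE>
  <road name="Example Street" length="120.0" id="1" junction="-1">
    <type s="0.0" type="town">
      <speed max="30" unit="mph"/>
    </type>
    <lanes>
      <laneSection s="0.0">
        <left>
          <lane id="1" type="driving" level="false"/>
        </left>
        <center>
          <lane id="0" type="none" level="false"/>
        </center>
        <right>
          <lane id="-1" type="driving" level="false"/>
          <lane id="-2" type="sidewalk" level="false"/>
        </right>
      </laneSection>
    </lanes>
  </road>
</OpenDRIVE>
"""


def summarise_roads(xodr_text):
    """Return one summary dict per <road> element (hypothetical helper)."""
    root = ET.fromstring(xodr_text.strip())
    summaries = []
    for road in root.iter("road"):
        speed = road.find("type/speed")  # None if no speed limit is given
        lane_types = [lane.get("type") for lane in road.iter("lane")]
        summaries.append({
            "name": road.get("name"),
            "length_m": float(road.get("length")),
            "speed_limit": (speed.get("max"), speed.get("unit")) if speed is not None else None,
            "driving_lanes": lane_types.count("driving"),
        })
    return summaries


for summary in summarise_roads(SAMPLE_XODR):
    print(summary)
```

The visual 3D model carries none of this information explicitly, which is why a simulator typically needs both deliverables.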

We provide these specifically to enable scenarios that are essential for testing. That could mean, for example, a replica of a real stretch of public road built from an accurate LiDAR scan, enabling driverless vehicle developers to simulate, optimise, validate and de-risk in the virtual world ahead of going out into the real world. It could also mean a completely fictitious route that has been specifically designed to challenge vehicle perception models and decision-making systems.

We work with vehicle simulation toolset providers, providing terrains configured to work with their software. We also work with a broad range of formats and runtime rendering engines and can provide simulator terrains and real-time visuals in any combination of formats to do whatever is needed.

Q: How do you validate the accuracy of the terrains?

A: We take quality very seriously in our work. Everything we do is reviewed multiple times and independently tested before going out the door. The tools and techniques used for testing and validation vary depending on the requirements, but could involve visual comparison to overlaid scan data, using automated tools to compare measurements across a model, or simply trialling a broad range of scenarios to cover all use cases and observing the results.

But it’s also important to understand what accuracy really means in the context in question. Some environment models might need to be highly geographically accurate because the data is being used to teach navigation systems or validate algorithms.

Others might only need to be representative and give an impression of a certain type of environment. For some, the road surface might need to be accurately modelled, including every ridge, pothole and camber, but for others a simple flat cross section might be adequate. This is key in understanding how to deliver on the objectives at the desired cost.

One area we focus on is the correlation between different versions of the terrain, for example the visual model and the logical map. Correlation here is essential; otherwise you can end up with vehicles that seem to float, or with misleading results because one system can see something that is hidden from another.
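One simple form an automated correlation check could take is sketched below: road heights sampled at the same positions from the logical map and from the visual model are compared against a tolerance. The sample values, the 5 cm tolerance and the `find_mismatches` helper are all assumptions made for illustration, not a description of Agility3's actual tooling.

```python
# Heights in metres sampled at the same positions along a road.
# Both lists are made-up values standing in for real exports.
logical_heights = [10.00, 10.05, 10.12, 10.20, 10.31]  # from the logical map
visual_heights = [10.00, 10.06, 10.11, 10.45, 10.30]   # from the visual 3D model


def find_mismatches(logical, visual, tolerance_m=0.05):
    """Return (sample index, visual minus logical) where the gap exceeds tolerance."""
    if len(logical) != len(visual):
        raise ValueError("Both models must be sampled at the same positions")
    return [
        (i, round(v - l, 3))
        for i, (l, v) in enumerate(zip(logical, visual))
        if abs(v - l) > tolerance_m
    ]


mismatches = find_mismatches(logical_heights, visual_heights)
for index, diff in mismatches:
    print(f"Sample {index}: visual surface differs from logical map by {diff:+.2f} m")
```

A positive difference at a sample means the visual surface sits above the logical road there, so a simulated vehicle following the logical map would appear to sink into the rendered road, while a negative difference would make it appear to float.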

Q: How do you simulate adverse weather conditions, such as heavy rain, fog, or snow, and their impact on driverless vehicle performance?

A: We are in the fortunate position, partly driven by the games industry, that 3D rendering technologies such as ray-tracing and particle simulation are now widely available and, in parallel, the power of commercially available GPUs (Graphics Processing Units) has increased significantly, with current GPUs being around 25 times faster than five years ago, enabling these technologies at runtime.

This means we can render scenes with a very realistic representation of weather and show the impact these conditions have on vehicle sensors. The details of exactly how we do it, and the technologies involved, depend on the end user requirements and the tools and technologies they are already using.

Suffice to say, games engines and rendering technologies such as Unreal or Omniverse provide the platform for implementing effects such as adverse weather conditions. Our role is to understand these latest technologies and know how to implement solutions using them to meet the goals of the end user.

Q: What do you see as the future of driverless vehicle technology, and how will simulation continue to play a role?

A: I see driverless vehicle technology playing a major role in future mobility and transportation. Several cities around the world already have networks of driverless vehicles, improving the availability and accessibility of transport. I believe this will grow in terms of both coverage and the degree of autonomy and sophistication, although I think the cultural challenges with driverless vehicles are as tough as the technological ones.

Driverless vehicles are not new to industrial sites where driverless forklifts, for example, have been around for a while. This is only going to grow with bigger and heavier driverless vehicles moving more and more goods over ever-increasing distances. I also expect to see increasing levels of autonomy in heavy industries such as construction and mining.

Simulation will continue to be a critical component in this evolution. It will enable designers and developers to iterate quicker, pushing the boundaries of what’s possible by testing new algorithms, sensors, and vehicle designs faster, for less money and more effectively.

Advances in AI and machine learning will enhance the capabilities of our simulations, bringing them ever closer to reality and reducing development time and costs.

Virtual Reality (VR) also has an important role to play. We’ve used VR in the past to help generate public interest in driverless vehicle trials and understand what it could be like to travel in a driverless vehicle. Coming back to the earlier point about cultural challenges, the public need confidence that a driverless vehicle service is safe, and that means more than just a proven technology. People want to know that a person somewhere is responsible for those vehicles transporting them or their loved ones.

Looking ahead, as driverless vehicles become more common over the next few years, I believe the role of a remote driver or remote assistant will become absolutely critical. We will have teams of people in control centres monitoring fleets of vehicles, with a potential need to intervene or provide support at any time.

These teams will need to be aware of what is happening at all times and there is huge potential for VR technology to enable organisations to ensure human factors such as fatigue are fully considered when developing such facilities.

The same technologies can also be used to optimise and validate operational processes, so that control rooms work more efficiently and more effectively, and to train staff to deal with challenging real-world situations and demonstrate that the risks in doing so have been properly managed.

Ultimately, simulation will continue to play a crucial role in ensuring the safety and reliability of driverless vehicles, and public trust in them.

For more information on Agility3’s 3D visualisation, modelling, and simulation solutions, please get in touch.